IONOS Agent Starter Pack

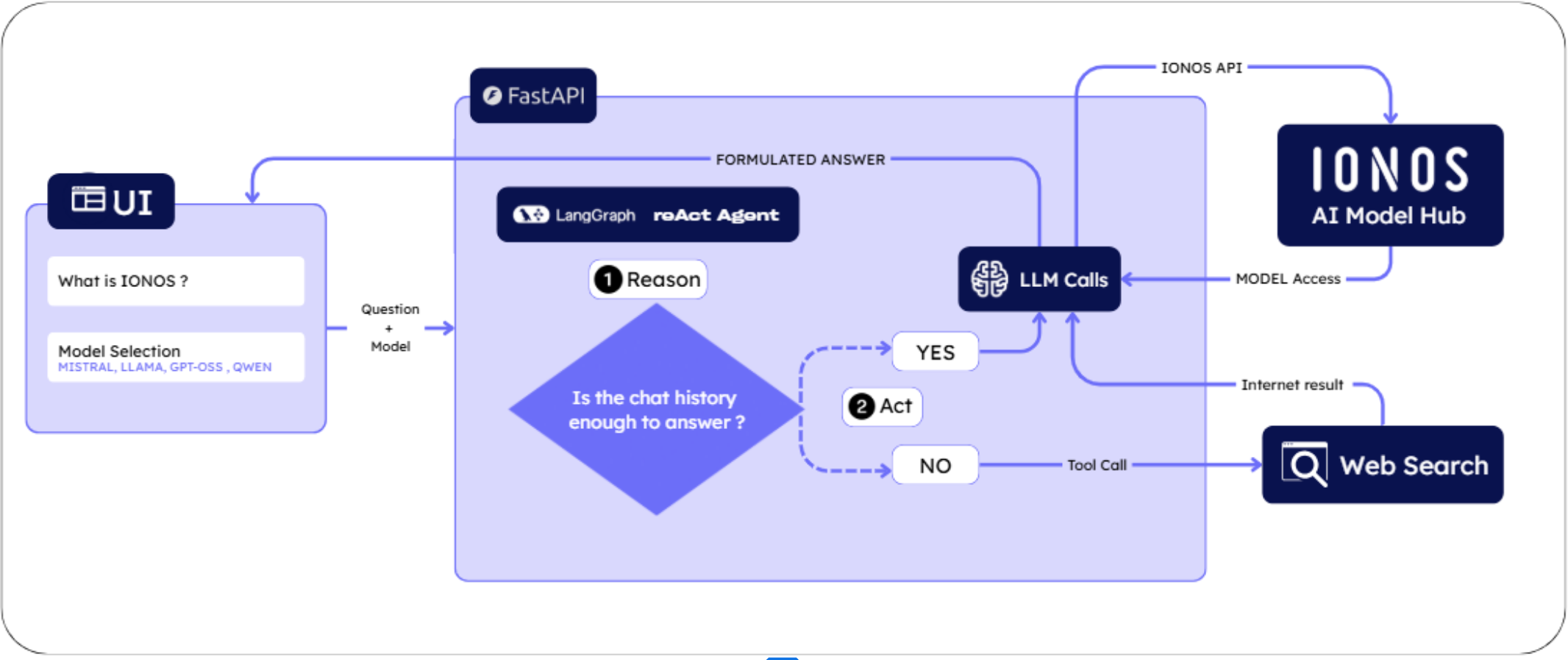

A full‑stack intelligent agent built on the LangGraph ReAct architecture and IONOS AI. It features dynamic web search, context-aware reasoning, and support for both inference models (IONOS Model Hub) and fine-tuned models (IONOS Studio).

What’s inside

- Backend: FastAPI service with a LangGraph ReAct agent (`backend/`).

- Frontends: Streamlit app (recommended) and Next.js starter (`frontends/`).

- Docs: MDX documentation site (`docs/`).

Features

- Chat with IONOS AI models (Hub inference + Studio fine-tuned) via a simple HTTP API.

- Tools: real-time web search via the Tavily API (inference models only).

- ReAct Agent: reasoning and acting with dynamic tool selection.

- Streaming support for real-time responses (inference models).

- Easy to add your own tools and fine-tuned models.
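The registry below is a plain-Python illustration of what adding a tool involves: a named function plus a description the agent can read when deciding which tool to call. `TOOLS`, `register_tool`, and `word_count` are hypothetical names and are not part of this repository. A LangGraph backend most likely expects LangChain-style tool objects instead (for example, functions wrapped with the `@tool` decorator from `langchain_core.tools`), so check `backend/` for the actual registration point.

```python
from typing import Callable, Dict

# Hypothetical registry: maps a tool name to a plain Python function.
TOOLS: Dict[str, Callable[[str], str]] = {}


def register_tool(func: Callable[[str], str]) -> Callable[[str], str]:
    """Register a tool under its function name; the docstring doubles as its description."""
    if not func.__doc__:
        raise ValueError(f"Tool {func.__name__!r} needs a docstring describing when to use it")
    TOOLS[func.__name__] = func
    return func


@register_tool
def word_count(text: str) -> str:
    """Count the words in a piece of text."""
    return str(len(text.split()))


def describe_tools() -> str:
    """Render the registered tools the way an agent prompt might list them."""
    return "\n".join(f"{name}: {fn.__doc__.strip()}" for name, fn in sorted(TOOLS.items()))
```

The docstring requirement reflects how tool-using agents generally work: the model picks a tool based on its name and description, so a tool without a clear description is hard for the agent to select correctly.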

Requirements

- Python 3.10+

- Node.js 18+ (only for Next.js frontend)

- IONOS_API_KEY (required for inference models)

- TAVILY_API_KEY (required for web search functionality)

- STUDIO_API_KEY (optional, for fine-tuned models)
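A small pre-flight check can catch a missing key before the backend starts. This sketch assumes the backend reads its keys from environment variables named exactly as listed above. The variable names come from this README; the script itself is illustrative and does not ship with the project.

```python
import os
import sys
from typing import List, Mapping, Optional

REQUIRED = ("IONOS_API_KEY", "TAVILY_API_KEY")
OPTIONAL = ("STUDIO_API_KEY",)


def missing_keys(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED if not env.get(name)]


if __name__ == "__main__":
    if sys.version_info < (3, 10):
        raise SystemExit("Python 3.10+ is required")
    missing = missing_keys()
    if missing:
        raise SystemExit(f"Missing required environment variables: {', '.join(missing)}")
    for name in OPTIONAL:
        if not os.environ.get(name):
            print(f"{name} is not set: fine-tuned (Studio) models will be unavailable.")
    print("Environment looks good.")
```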

Quickstart

- Backend: follow the steps in Backend Setup.

- Choose a frontend:
  - Streamlit Overview (recommended)
  - Next.js Setup (alternative)

- Select a model type (Inference or Fine-tuned) and start chatting.

- With an inference model, the agent searches the web automatically whenever it decides a search is needed. Fine-tuned models answer without web search.
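You can also call the backend directly instead of going through a frontend. The sketch below only shows the general shape of a JSON chat request using the standard library. The URL `http://localhost:8000/chat`, the `model`/`messages` payload fields, and `build_chat_request` are all assumptions for illustration; check `backend/` for the real route, port, and request schema.

```python
import json
import urllib.request
from typing import Dict, List

# Hypothetical endpoint: replace with the route and port your backend actually exposes.
BACKEND_URL = "http://localhost:8000/chat"


def build_chat_request(model: str, messages: List[Dict[str, str]]) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request with an assumed payload shape."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        BACKEND_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(
        "your-model-id",  # placeholder: an inference model name or a Studio model UUID
        [{"role": "user", "content": "Summarize today's AI news."}],
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        print(resp.read().decode("utf-8"))
```

This reads the whole response at once. A streamed response from an inference model would need to be read incrementally, in whatever format the backend uses.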

How it works

Inference Models (Hub): the client sends messages → FastAPI routes them to the ReAct agent → the agent calls tools such as web search as needed → the model produces a context-aware answer, streamed back to the client.
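The reason-then-act cycle can be illustrated with a hand-rolled loop. This is a conceptual sketch, not LangGraph's API: in the real backend, LangGraph manages the agent state and the IONOS model makes the decisions. Here, `react_loop`, `scripted_model`, and `fake_web_search` are hypothetical stand-ins, with a scripted "model" in place of an LLM and a fake search in place of Tavily.

```python
from typing import Callable, Dict, List, Tuple

# A model step is either ("tool", tool_name, tool_input) or ("final", answer, "").
Step = Tuple[str, str, str]


def react_loop(
    question: str,
    model: Callable[[List[str]], Step],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate reasoning (model) and acting (tool calls) until the model answers."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        kind, first, second = model(transcript)
        if kind == "final":
            return first
        # Act: run the chosen tool and feed its result back as an observation.
        observation = tools[first](second)
        transcript.append(f"Action: {first}({second!r})")
        transcript.append(f"Observation: {observation}")
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(transcript: List[str]) -> Step:
    """Stand-in for the LLM: search once, then answer from the latest observation."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return ("tool", "web_search", "IONOS AI Model Hub")
    latest = observations[-1].removeprefix("Observation: ")
    return ("final", f"Based on search: {latest}", "")


def fake_web_search(query: str) -> str:
    """Stand-in for the Tavily search tool."""
    return f"top result for {query}"


if __name__ == "__main__":
    print(react_loop("What is the IONOS AI Model Hub?", scripted_model, {"web_search": fake_web_search}))
    # prints: Based on search: top result for IONOS AI Model Hub
```

The `max_steps` cap stops an agent that keeps calling tools without ever reaching an answer from running indefinitely.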

Fine-tuned Models (Studio): the client sends messages with a model UUID → FastAPI detects the UUID format and routes the request to the Studio API → the specialized model produces an answer without web search.
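The UUID check that separates the two paths can be sketched with Python's standard `uuid` module. This is a minimal sketch under an assumption: any model ID in canonical UUID form goes to Studio, and everything else goes to the Hub agent. `is_studio_model`, `route`, and the route labels are hypothetical names, not the backend's actual code.

```python
import uuid


def is_studio_model(model_id: str) -> bool:
    """True if model_id is a canonical UUID, i.e. (by assumption) a Studio fine-tuned model."""
    try:
        parsed = uuid.UUID(model_id)
    except ValueError:
        return False
    # uuid.UUID also accepts braces, "urn:uuid:" prefixes and bare 32-digit hex;
    # require the canonical 8-4-4-4-12 form so ordinary model names never match.
    return str(parsed) == model_id.lower()


def route(model_id: str) -> str:
    # Hypothetical route labels for the two paths described above.
    return "studio" if is_studio_model(model_id) else "hub-react-agent"


if __name__ == "__main__":
    print(route("3fa85f64-5717-4562-b3fc-2c963f66afa6"))   # studio
    print(route("meta-llama/Meta-Llama-3.1-8B-Instruct"))  # hub-react-agent
```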

Learn more: