Backend API

Base URL: http://localhost:8000

Headers

x-model-id: required. Example: mistralai/Mistral-Small-24B-Instruct.

POST /

Sends conversation messages to the ReAct agent.

Request Body:

{

"messages": [

{"type": "human", "content": "What's the weather like today in Berlin?"}

]

}

Supported message types:

- "human": User messages (requires content field)
- "ai": Assistant messages (requires content field)
- "tool": Tool messages (requires content field, optional name field)

Required Headers:

x-model-id: IONOS model identifier (e.g. mistralai/Mistral-Small-24B-Instruct)
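The request described above can be sketched on the client side with Python's standard library alone. This is a minimal sketch, not the project's own client: the helper names validate_message and build_chat_request are hypothetical, the Content-Type header is an assumption, and the validation only mirrors the message rules listed in this section rather than whatever checks the backend actually performs.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # base URL given in this document
SUPPORTED_TYPES = {"human", "ai", "tool"}  # message types listed above


def validate_message(message: dict) -> None:
    """Client-side check of the documented message rules (hypothetical helper)."""
    if message.get("type") not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported message type: {message.get('type')!r}")
    if "content" not in message:
        raise ValueError("every message requires a 'content' field")


def build_chat_request(messages: list, model_id: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST / request carrying the x-model-id header."""
    for message in messages:
        validate_message(message)
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/",
        data=body,
        headers={
            "Content-Type": "application/json",  # assumption: JSON body
            "x-model-id": model_id,
        },
        method="POST",
    )


request = build_chat_request(
    [{"type": "human", "content": "What's the weather like today in Berlin?"}],
    "mistralai/Mistral-Small-24B-Instruct",
)
```

To actually send it against a running backend, pass the request to urllib.request.urlopen and decode the reply with json.load.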

Response: Returns the last message from the agent conversation as a LangChain message object.

Example Response:

{

"content": "The weather in Berlin today is characterized by moderate rain with a temperature of 22.2°C (72.0°F). The humidity is 61%, and there is a slight breeze from the WSW at 17.3 km/h (10.7 mph). The sky is partly cloudy with 50% cloud cover. The wind chill feels like 20.0°C (68.0°F), and the heat index is also 20.0°C (68.0°F). The dew point is at 13.6°C (56.6°F), and visibility is good at 10 km (6 miles). The UV index is low at 1.1, and there are gusts of wind reaching up to 25.7 km/h (16.0 mph).",

"additional_kwargs": {

"refusal": null

},

"response_metadata": {

"token_usage": {

"completion_tokens": 181,

"prompt_tokens": 1006,

"total_tokens": 1187,

"completion_tokens_details": null,

"prompt_tokens_details": null

},

"model_name": "mistralai/Mistral-Small-24B-Instruct",

"system_fingerprint": null,

"id": "chatcmpl-762085b2228b42fb83a4cfd69aecf11e",

"service_tier": null,

"finish_reason": "stop",

"logprobs": null

},

"type": "ai",

"name": null,

"id": "run--fa6dbcb0-2c5d-4f12-bd51-4cd6dfefc81f-0",

"example": false,

"tool_calls": [],

"invalid_tool_calls": [],

"usage_metadata": {

"input_tokens": 1006,

"output_tokens": 181,

"total_tokens": 1187,

"input_token_details": {},

"output_token_details": {}

}

}

Note: Frontend applications should extract the content field to get the actual message text. The response also includes comprehensive LangChain metadata, including token usage, model information, and execution details.
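As a small illustration of the note above, the following sketch pulls the message text and the total token count out of a response shaped like the example. The function name extract_reply is hypothetical, and the sample dictionary is a shortened stand-in for a real response.

```python
from typing import Optional, Tuple


def extract_reply(message: dict) -> Tuple[str, Optional[int]]:
    """Return (text, total_tokens) from a response shaped like the example."""
    text = message["content"]
    # usage_metadata may be absent or null, so fall back to an empty dict.
    usage = message.get("usage_metadata") or {}
    return text, usage.get("total_tokens")


# Shortened stand-in for the example response shown above.
sample = {
    "content": "The weather in Berlin today is characterized by moderate rain.",
    "type": "ai",
    "usage_metadata": {"input_tokens": 1006, "output_tokens": 181, "total_tokens": 1187},
}
text, total_tokens = extract_reply(sample)
```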

GET /studio/models

Retrieves the available fine-tuned models from IONOS Studio.

Response: Returns a dictionary mapping model names to their UUIDs.

Example Response:

{

"qwen-gdpr": "uuid-1234-5678-90ab-cdef",

"granite-gdpr": "uuid-abcd-efgh-ijkl-mnop",

"qwen3-sharegpt": "uuid-9876-5432-10fe-dcba"

}

Usage:

Frontend applications use this endpoint to populate the fine-tuned model dropdown. When a fine-tuned model is selected, the frontend sends the raw model UUID in the x-model-id header (e.g., 7b19cae7-0983-4a6b-a03c-a647db48f00b), and the backend automatically detects it and routes the request to the IONOS Studio API.
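The dropdown flow described above could look roughly like this on the client side. Both helper names (fetch_studio_models, studio_headers) are hypothetical, and the sketch assumes the endpoint returns the plain name-to-UUID mapping shown in the example response.

```python
import json
import urllib.request


def fetch_studio_models(base_url: str = "http://localhost:8000") -> dict:
    """GET /studio/models and return the name -> UUID mapping (needs a running backend)."""
    with urllib.request.urlopen(f"{base_url}/studio/models") as response:
        return json.load(response)


def studio_headers(models: dict, display_name: str) -> dict:
    """Turn a dropdown selection into the x-model-id header for POST /."""
    if display_name not in models:
        raise KeyError(f"unknown fine-tuned model: {display_name!r}")
    # The raw UUID is sent as-is; no prefix is added.
    return {"x-model-id": models[display_name]}


# Illustrative mapping; the UUID is the example value used in this document.
models = {"qwen-gdpr": "7b19cae7-0983-4a6b-a03c-a647db48f00b"}
headers = studio_headers(models, "qwen-gdpr")
```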

Model Routing

The backend supports two types of models and automatically routes based on the x-model-id format:

- IONOS Hub (Inference Models): Provider/name format with a slash (e.g., mistralai/Mistral-Small-24B-Instruct)
- IONOS Studio (Fine-tuned Models): UUID format (e.g., 7b19cae7-0983-4a6b-a03c-a647db48f00b)

The backend detects Studio models using Python’s uuid module: if the model ID parses as a valid UUID, the request is routed to Studio. No prefix is needed.
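The detection rule can be sketched as follows. This is an illustration of the idea, not the backend's actual code: the function names is_studio_model and route_for and the "studio"/"hub" labels are hypothetical.

```python
import uuid


def is_studio_model(model_id: str) -> bool:
    """Return True if model_id parses as a UUID (hypothetical helper)."""
    try:
        uuid.UUID(model_id)
    except ValueError:
        return False
    return True


def route_for(model_id: str) -> str:
    """Pick a backend label based on the x-model-id format."""
    return "studio" if is_studio_model(model_id) else "hub"


studio_route = route_for("7b19cae7-0983-4a6b-a03c-a647db48f00b")
hub_route = route_for("mistralai/Mistral-Small-24B-Instruct")
```

One caveat with a check like this: uuid.UUID also accepts the same 32 hex digits without hyphens, wrapped in braces, or with a urn:uuid: prefix, so it is more permissive than the hyphenated form shown in the examples.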

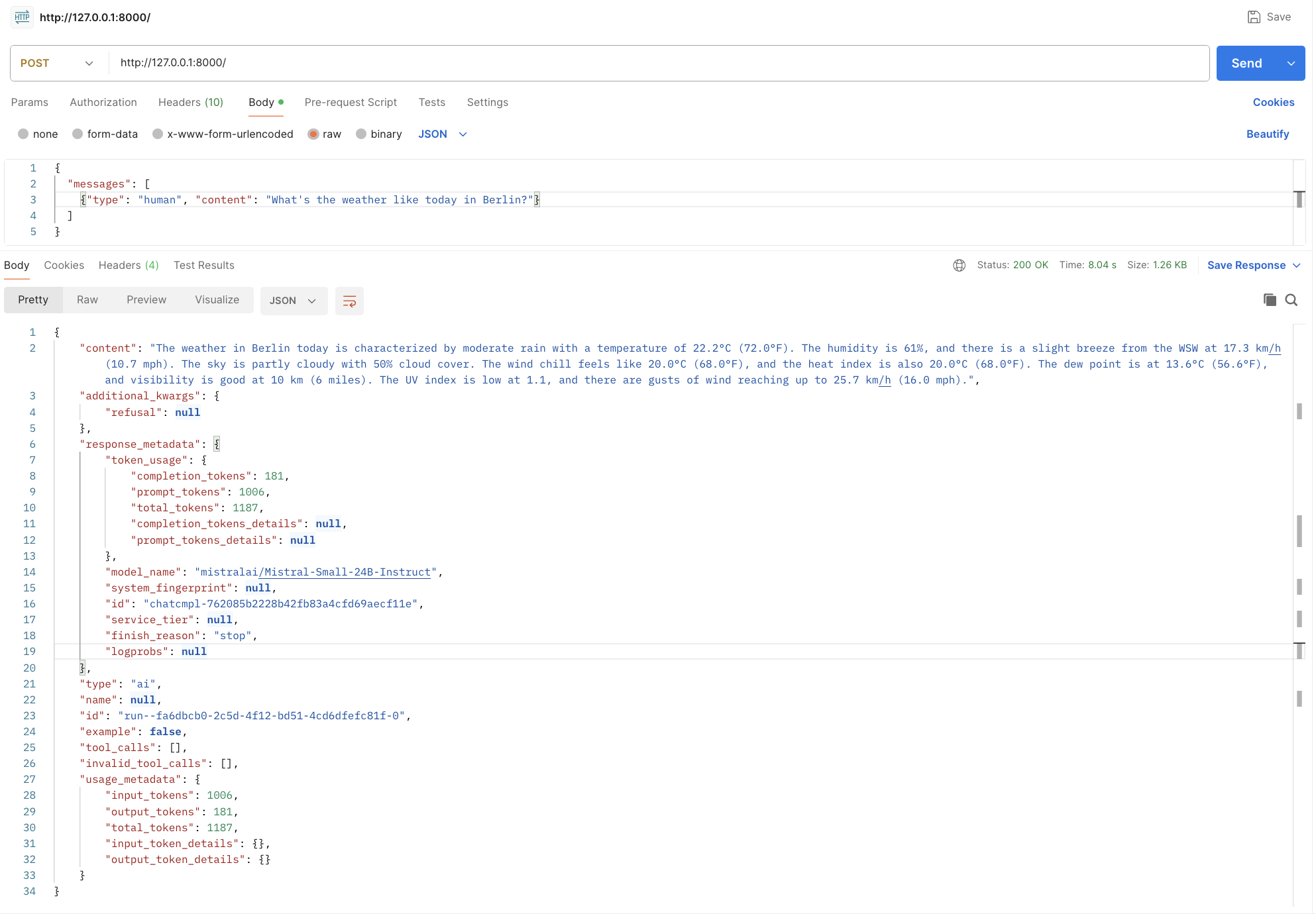

Example Test in Postman

The screenshot above shows a real test of the API endpoint using Postman, demonstrating the exact request payload and response format.